Orhan Gazi Yalçın


Generating explanations from black-box models using model properties, local logical representations, and global logical representations

Quick Recap: XAI and NSC

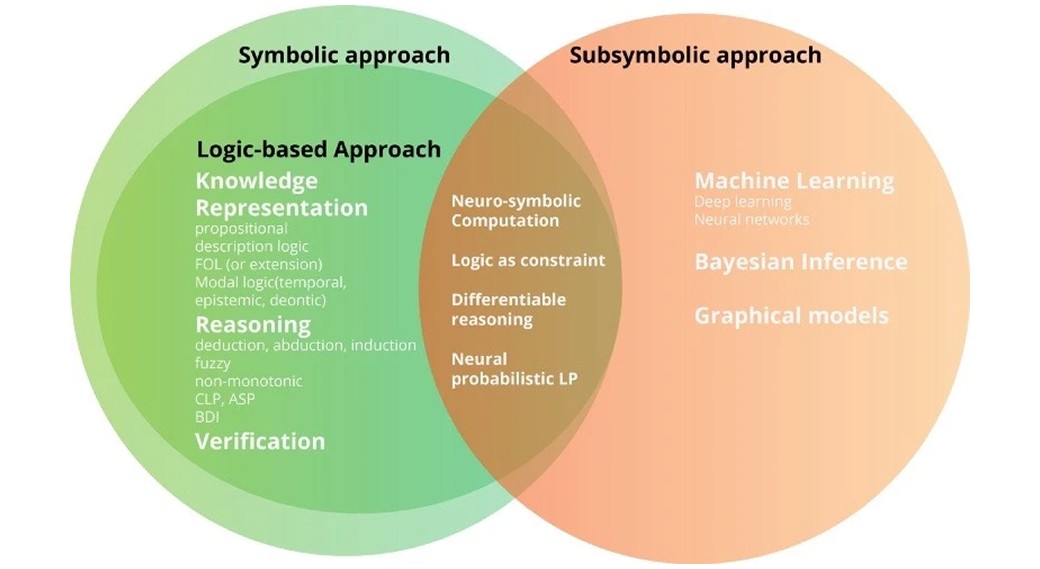

Explainable AI (XAI) deals with developing AI models that are inherently easier for humans to understand, including users, developers, policymakers, and law enforcement. Neuro-Symbolic Computing (NSC) deals with combining sub-symbolic learning algorithms with symbolic reasoning methods. Because this combination maps learned representations to human-readable symbols, we can treat Neuro-Symbolic Computing as a sub-field of Explainable AI. NSC is also one of the most applicable approaches since it relies on combining existing methods and models.

Explainability is the ability to meaningfully describe things in a human language. In other words, it is the possibility of mapping raw information (data) to a symbolic representation that is meaningful to humans (e.g., an English text).

By extracting symbols out of sub-symbols, we can make these sub-symbols explainable. Both XAI and NSC try to make sub-symbolic systems more explainable. NSC is more about mapping sub-symbols to symbols, that is, explainability by design through logic: symbolic reasoning over learned sub-symbolic representations. XAI is a less specific paradigm, and it is more about explainability in all its nuances, even when it is wrapped around unexplainable models. If extracting symbols out of sub-symbols implies explainability, then XAI includes NSC.

Let’s look at NSC through an example:

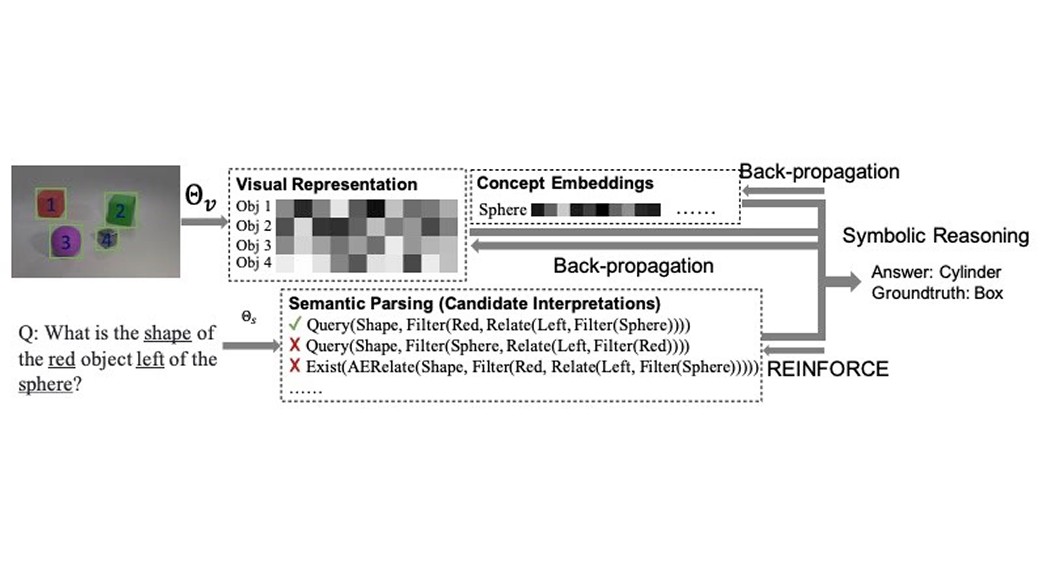

Neuro-Symbolic Concept Learner

Mao et al. propose a new NSC model, the Neuro-Symbolic Concept Learner, which works in the following steps:

An image classifier learns to extract the sub-symbolic (numerical) representations from image or text segments.

Then, every sub-symbolic representation is associated with a human-understandable symbol.

Then, a symbolic reasoner checks the embedding similarities of symbol representations.

The training continues until the accuracy of the output of the reasoner is maximized by updating the representations.
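
The association and similarity check in the steps above can be sketched roughly as follows. This is a toy illustration, not the Neuro-Symbolic Concept Learner itself: the vectors, the concept names, and the helper `closest_symbol` are made up for the example, and cosine similarity is assumed as the similarity measure.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Hypothetical learned embeddings for human-understandable symbols.
symbol_embeddings = {
    "red": [0.9, 0.1, 0.0],
    "green": [0.1, 0.9, 0.0],
    "cube": [0.0, 0.1, 0.9],
}


def closest_symbol(representation, symbols):
    """Return the symbol whose embedding is most similar to the representation."""
    return max(symbols, key=lambda name: cosine(representation, symbols[name]))


# Hypothetical sub-symbolic (numerical) representation of one image segment.
object_representation = [0.8, 0.2, 0.1]
label = closest_symbol(object_representation, symbol_embeddings)
print(label)
```

In a trained system, both the object representations and the symbol embeddings would be updated during training; here they are fixed by hand only to show the lookup.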

White Box vs. Black Box Models

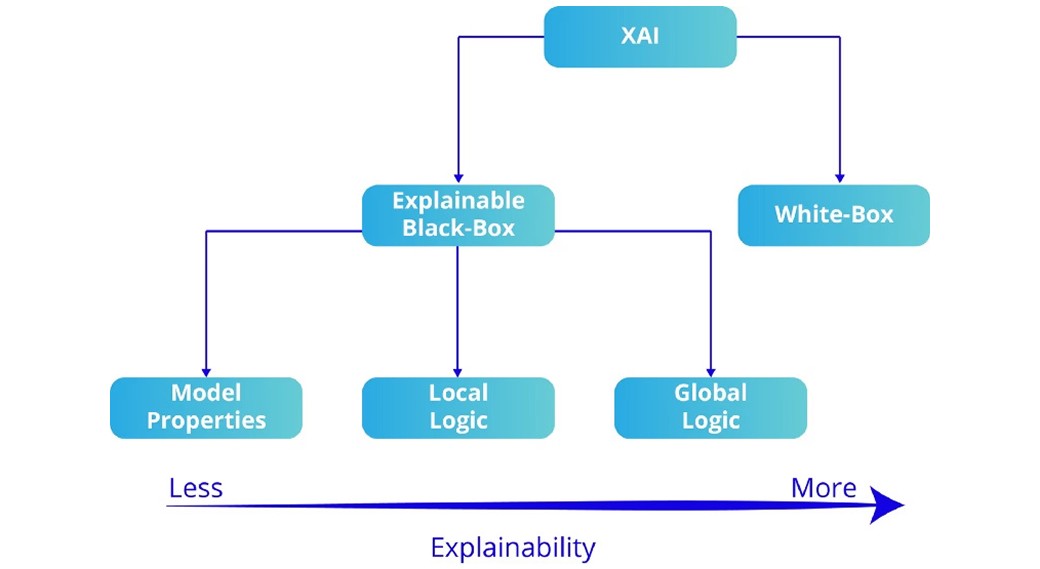

An AI model can be (i) white-box or (ii) black-box.

A white-box model is explainable by design. Therefore, it does not require additional capabilities to be explainable.

A black-box model is not explainable by itself. Therefore, to make a black-box model explainable, we have to adopt several techniques to extract explanations from the model's inner logic or outputs.

Black-box models can be made explainable through:

Model Properties: Demonstrating specific properties of the model or its predictions, such as (a) sensitivity to attribute changes or (b) identification of components of the model (e.g., neurons or nodes) responsible for a given decision.

Local Logic: A representation of the inner logic behind a single decision or prediction.

Global Logic: A representation of the entire inner logic.
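
As a rough sketch of the first technique, sensitivity to attribute changes, the snippet below nudges each input of a model and records how the output moves. The `black_box` function, its feature names, and its coefficients are invented stand-ins; in practice the model would be any predictor we can only query.

```python
def black_box(features):
    """Stand-in for an opaque model: callers only see inputs and outputs."""
    income, debt, age = features
    return 0.5 * income - 2.0 * debt + 0.0 * age


def sensitivity(model, features, delta=1.0):
    """Change in the model output when each feature is nudged by delta."""
    baseline = model(features)
    effects = []
    for i in range(len(features)):
        perturbed = list(features)
        perturbed[i] += delta  # change one attribute, keep the rest fixed
        effects.append(model(perturbed) - baseline)
    return effects


# Hypothetical input: [income, debt, age]
applicant = [40.0, 10.0, 35.0]
effects = sensitivity(black_box, applicant)
print(effects)
```

A large absolute effect suggests the prediction depends strongly on that attribute near this input, while an effect of zero suggests the attribute is ignored there. This describes one prediction only, so it is a model property rather than a representation of the model's global logic.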

Together, these categories give the sub-categories of AI models in terms of their explainability, as summarized in the diagram in this section.

Rule-based Explainability vs. Case-based Explainability

Apart from the logical differentiation of explainable models, we also identify two common types of explanations that all of the abovementioned models can be adapted to output:

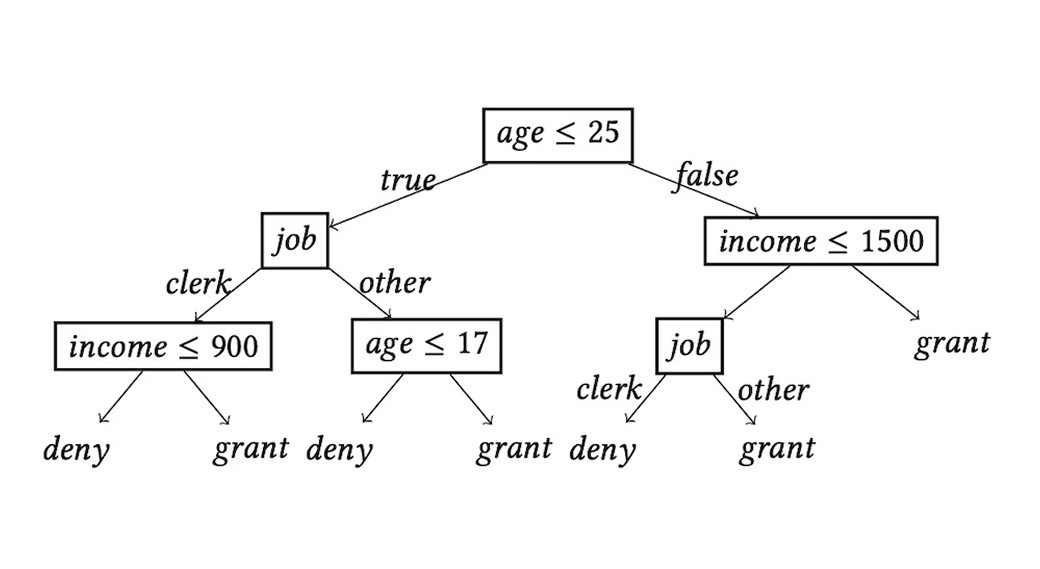

Rule-based Explanations: Rule-based explainability relies on generating a set of formal logical rules that formulate the inner logic of a given model.

Case-based Explanations: Case-based explainability relies on providing valuable input-output pairs (both positive and negative) to give an intuition of the inner logic of the model. Case-based explanations rely on the human ability to infer logic from these pairs.

Rule-Based vs Case-Based Learning Algorithm Comparison Example:

Let’s say our model needs to learn a recipe for making an apple pie. We have recipes for blueberry pie, cheesecake, shepherd’s pie, and a plain cake. The rule-based learning approach tries to come up with a set of general rules for making all types of desserts (i.e., an eager approach), whereas the case-based learning approach generalizes the information only as needed to cover a particular task (i.e., a lazy approach). Therefore, the case-based approach would look for the desserts most similar to apple pie in the available data. Then, it would adapt those similar recipes with small variations.
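
The case-based side of this example can be sketched as a nearest-case lookup followed by a small adaptation. The ingredient sets below are invented for illustration, and Jaccard similarity over ingredients is just one assumed way to measure how similar two recipes are.

```python
# Hypothetical case base: recipe name -> set of ingredients.
recipes = {
    "blueberry pie": {"flour", "butter", "sugar", "blueberries", "pie crust"},
    "cheesecake": {"cream cheese", "sugar", "eggs", "biscuit base"},
    "shepherd's pie": {"minced lamb", "potatoes", "onion", "butter"},
    "plain cake": {"flour", "butter", "sugar", "eggs"},
}


def jaccard(a, b):
    """Share of ingredients the two recipes have in common."""
    return len(a & b) / len(a | b)


def most_similar(query, cases):
    """Retrieve the stored case closest to the query."""
    return max(cases, key=lambda name: jaccard(query, cases[name]))


# What we roughly expect an apple pie to need (an assumption for the query).
apple_pie_query = {"flour", "butter", "sugar", "apples", "pie crust"}

nearest = most_similar(apple_pie_query, recipes)

# Adapt the retrieved case with a small variation: swap the fruit.
adapted = (recipes[nearest] - {"blueberries"}) | {"apples"}
print(nearest, sorted(adapted))
```

The retrieved case doubles as the explanation: the system can justify its apple pie recipe by pointing to the most similar recipe it already knew and the change it made.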

XAI: Designing White-Box Models

Including rule-based and case-based learning systems, we have four main classes of white-box designs:

Hand-crafted expert systems;

Rule-based learning systems: algorithms to learn logical rules from data such as inductive logic programming, decision trees, etc.;

Case-based learning systems: algorithms based on case-based reasoning. They make use of examples, cases, precedents, and/or counter-examples to explain system outputs; and

Embedded symbols & extraction systems: more biology-inspired algorithms such as Neuro-Symbolic Computing.
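
To make the rule-based learning class a little more concrete, here is a minimal sketch of a one-level decision tree (a decision stump) that learns a single human-readable rule from data. The feature names, the toy samples, and the helper `learn_stump` are assumptions made for this illustration, not part of any particular library.

```python
def learn_stump(samples):
    """Find the (feature, threshold) whose rule 'x[feature] > threshold'
    agrees with the most labels in the training samples."""
    best = None
    for feature in range(len(samples[0][0])):
        for values, _ in samples:
            threshold = values[feature]
            correct = sum(
                (x[feature] > threshold) == label for x, label in samples
            )
            if best is None or correct > best[0]:
                best = (correct, feature, threshold)
    return best[1], best[2]


# Hypothetical toy data: (sugar_grams, bake_minutes) -> is it a dessert?
feature_names = ["sugar_grams", "bake_minutes"]
samples = [
    ((120, 45), True),
    ((150, 50), True),
    ((10, 60), False),
    ((5, 40), False),
]

feature, threshold = learn_stump(samples)
rule = f"IF {feature_names[feature]} > {threshold} THEN dessert"
print(rule)
```

The learned model is the rule itself, which is why such systems count as white-box: no extra technique is needed to extract an explanation.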

In the upcoming parts of this series, we will have hands-on examples of these methods.

Final Notes

In this post, we:

1 — Briefly covered the differences and similarities between XAI and NSC;

2 — Defined and compared black-box and white-box models;

3 — Outlined approaches to make black-box models explainable (model properties, local logic, global logic);

4 — Compared Rule-based Explanations and Case-based Explanations and exemplified them.

In the next post, we will cover the libraries and technologies available for explainability work. We will use some of these libraries to extract explanations from black-box and white-box models.
